Facebook Removed 1.5 Million Videos Of New Zealand Terror Attack

Monday, March 18th 2019, 9:37 am

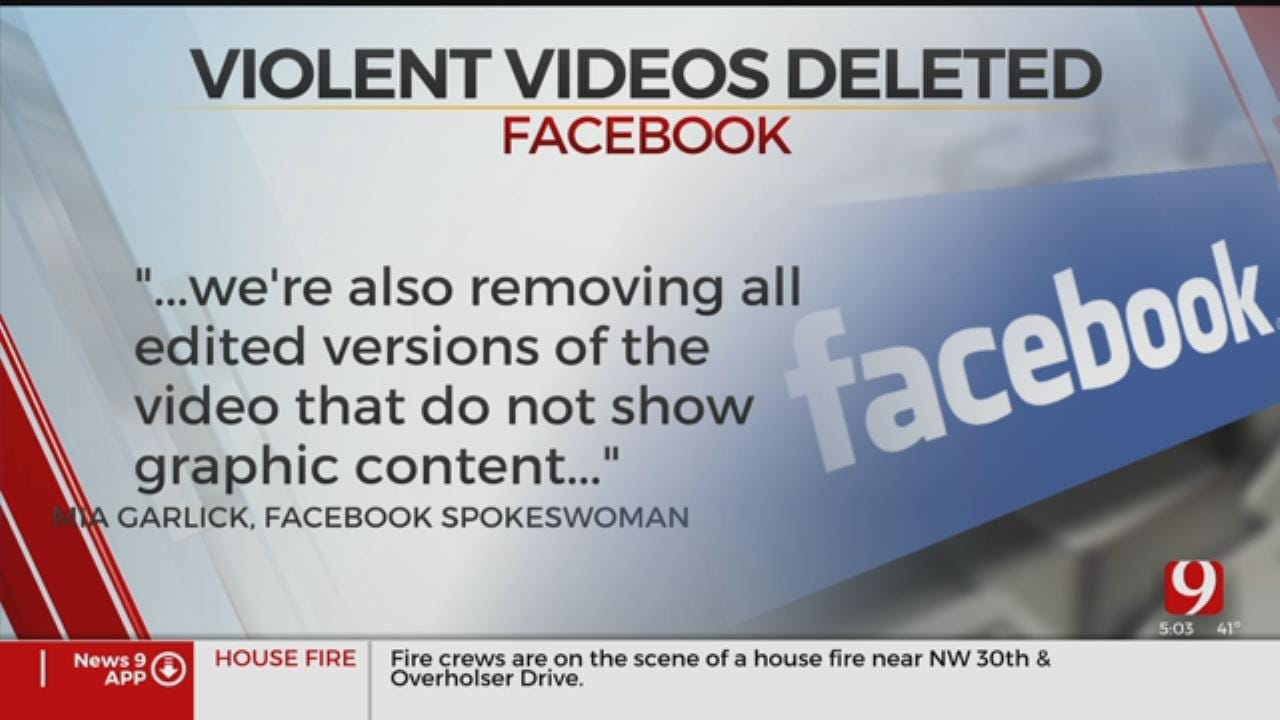

As New Zealand reels from a terrorist attack against two mosques in Christchurch, Facebook announced it deleted 1.5 million videos of the shootings in the first 24 hours following the massacre. The tech company said in a tweet late Saturday that it prevented 1.2 million videos from being uploaded to its platform, which has more than 2.2 billion global users.

That implies, however, that about 300,000 copies of the video were available to watch for at least a short time before Facebook removed them. It also shows how quickly such provocative and graphic content circulates online, and the challenges social media companies such as Facebook face as they try to stamp it out.

Video of the brutal attack was livestreamed on Facebook by the suspected gunman, Brenton Tarrant, an Australian native who appeared in court this weekend and has been charged with murder. Tarrant is likely to face more charges when he goes before the Christchurch High Court on April 5. An online manifesto spewed a message of hate replete with references familiar to extremist chat rooms and internet trolls.

Video of the attack showed the gunman taking aim with assault-style rifles painted with symbols and quotes used widely by the white supremacist movement online.

Facebook told CNET on Friday it had removed the footage and was pulling down posts that expressed "praise or support" for the shootings not long after the shooting broke out. It said it is working with police on the investigation. In a tweet Friday, YouTube, which is owned by Google, also said it has been "working vigilantly to remove any violent footage."

This isn't the first time a crime has been livestreamed on Facebook, putting more pressure on a platform that has recently dealt with a series of scandals. Nicholas Thompson, editor-in-chief for Wired magazine, said Facebook has already hired thousands of content moderators and made using artificial intelligence to stop toxic content a higher priority, but these efforts still fall short.

"The problem is when you connect humanity, the way Facebook has done, the way other tech platforms have done, you get all of humanity and there's a lot of terrible things that happen and it gets amplified," Thompson said.

Manifesto posted online

The gunman appears to have posted a manifesto intended to reach a wide audience. CBS News investigative reporter Graham Kates told CBSN the writings could be described as "s***posting," a common trolling tactic of posting a high volume of intentionally bad, ironic, deliberately provocative or misleading comments to distract those who aren't in the know. The manifesto includes tropes and coded references popular with members of the far right, giving important clues. Ironic comments that only people within that community would understand are designed to evoke an emotional response.

"His manifesto had references to people and events and leaders and countries all over the world. In essence, essentially driving headlines in different places," Kates said. "Then, when his community, his friends on the internet watch this coverage around the world, they can kind of chuckle at us for falling for it."

CNET senior producer Dan Patterson said the challenge for social media platforms like Facebook and YouTube is that they were built for "speed," not safety.

"Many people have been able to kind of figure out how the algorithms work, and what causes people to click and share content," he said. "We're in a very challenging situation where these platforms were maybe not intended to be weaponized, but the facts on the ground are that many people have figured out how to weaponize and use these platforms for disinformation."

As the dominant social media platforms crack down on hatred, extremists move on to smaller outlets where anger and distrust flourish.

"There is certainly a sense of victimization by many of these people. They feel as though they're censored and targeted by media companies and by the large technology firms," Patterson said.

Ben Tracy contributed to this report.

First published on March 17, 2019 / 6:26 PM

© 2019 CBS Interactive Inc. All Rights Reserved. This material may not be published, broadcast, rewritten, or redistributed. The Associated Press contributed to this report.
